Annual Monitoring is central to the quality arrangements at all institutions. It provides a focus for analysing and responding to a range of inter-related quantitative and qualitative evidence.

This project focused on how progression and retention data are used in the annual monitoring processes of Scottish higher education institutions (HEIs). Our work helped us identify common challenges and potential solutions, drawing on the range of imaginative and effective practice highlighted by our sector colleagues. Our top tips also cover aspects of annual monitoring data and practice outwith retention and progression. We have collated examples of practice that you will find valuable for:

Sector-wide activity

- exploring different ways of approaching common challenges

- inspiring colleagues with examples of effective practice from across the sector

- liaising with sector colleagues to understand what’s worked for them.

Explore top tips through examples of practice

Datasets: accuracy and relevance

Possible solutions

- Develop a central repository as a ‘one truth’ resource

- Explain reasons for apparent discrepancies between central and local data

- ‘Freeze’ live data for the purposes of Annual Monitoring Reporting (AMR) and advise report authors on ‘census date’ and rationale

- Agree and share institutional definitions for progression and retention to fit the institutional context.

Examples of institutional practice

- Dashboards as single source of evidence

- Guidance to report authors on data cut-off

- Progression and retention definitions to complement HESA definition

- Internal non-continuation indicator

- Analysing on-time completions.

Dashboards as single source of evidence

The University of the West of Scotland has developed a range of dashboards to support:

- annual monitoring

- programme portfolio review

- corporate Key Performance Indicator achievement.

The University shares the data via an online system that is available to all staff. This has become the single source of truth for data and evidence at UWS.

Guidance to report authors on data cut-off

QMU’s statistical appendices for Annual Monitoring are generated from the data held in its Student Records System. The usual cut-off point is around 21 September, when the Higher Education Statistics Agency (HESA) Student Return is committed. This deadline allows the University to capture results for the full academic cycle, following the semester two resit Exam Boards. When the data is sent out, staff are told that it may not match their locally held records. For example, a student withdrawal that is known to the Programme Leader but has not yet filtered through to Registry will not be captured in the statistics. Staff are encouraged to submit any queries about the data to the Management Information Officer so that misunderstandings and inconsistencies can be resolved.
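The effect of a census date can be sketched in a few lines of Python. The record structure (`StatusChange`, with separate `effective` and `recorded` dates) and the student identifiers are hypothetical illustrations, not QMU's Student Records System; the 21 September cut-off simply mirrors the date mentioned above. The sketch shows why a withdrawal that has already happened, but which Registry records after the census date, does not appear in the frozen snapshot.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class StatusChange:
    """One change to a student's record (hypothetical schema for illustration)."""
    student_id: str
    status: str      # e.g. "enrolled" or "withdrawn"
    effective: date  # date the change actually took effect
    recorded: date   # date Registry entered it in the central system


def snapshot(changes, census):
    """Latest status per student as known centrally on the census date.

    Changes recorded after the census date are ignored, even if they took
    effect earlier: this is one source of mismatch with local records.
    """
    latest = {}
    for change in sorted(changes, key=lambda c: c.effective):
        if change.recorded <= census:
            latest[change.student_id] = change.status
    return latest


census = date(2019, 9, 21)  # illustrative cut-off, modelled on the date above
changes = [
    StatusChange("S1", "enrolled", date(2018, 9, 17), date(2018, 9, 17)),
    StatusChange("S2", "enrolled", date(2018, 9, 17), date(2018, 9, 17)),
    StatusChange("S2", "withdrawn", date(2019, 5, 20), date(2019, 6, 1)),
    # Known to the Programme Leader in August, but only recorded in October:
    StatusChange("S1", "withdrawn", date(2019, 8, 30), date(2019, 10, 2)),
]

print(snapshot(changes, census))  # {'S1': 'enrolled', 'S2': 'withdrawn'}
```

Freezing the data in this way makes the AMR figures reproducible; the trade-off is that late-recorded changes would only show up in a later snapshot.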

Progression and retention definitions to complement HESA definition

The Higher Education Statistics Agency’s measure is non-continuation. This has a very specific definition, and the University of Edinburgh uses it as a standalone measure. In addition, the University uses the following working definitions of progression and retention:

- Progression is broadly whether a student has been granted leave to move from one year to the next year of a programme.

- Retention is whether a student has returned for a further year of study. Note: a student can progress but not return and a student can be retained but not progress.
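The distinction between the two measures can be made concrete with a short Python sketch. The `StudentYear` fields are hypothetical, and the example of a student who is retained without progressing (for instance by repeating a year) is an illustrative assumption rather than part of the University's definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudentYear:
    """Outcome for one student at the end of a year (hypothetical fields)."""
    student_id: str
    granted_progression: bool  # leave granted to move to the next year of the programme
    returned: bool             # registered for a further year of study


def rates(cohort):
    """Progression and retention rates for a cohort, as proportions."""
    n = len(cohort)
    return {
        "progression": sum(s.granted_progression for s in cohort) / n,
        "retention": sum(s.returned for s in cohort) / n,
    }


cohort = [
    StudentYear("A", granted_progression=True, returned=True),
    StudentYear("B", granted_progression=True, returned=False),   # progressed, did not return
    StudentYear("C", granted_progression=False, returned=True),   # retained, e.g. repeating the year
    StudentYear("D", granted_progression=False, returned=False),
]

print(rates(cohort))  # {'progression': 0.5, 'retention': 0.5}
```

Both rates come out at 0.5 here, yet they describe different students (B and C), which is why reporting only one of the two measures can hide movements in the other.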

Internal non-continuation indicator

- curriculum portfolio

- programme structures

- non-linear learner journeys of a significant proportion of the student population.

Analysing on-time completions

The Conservatoire reviews Higher Education Statistics Agency (HESA) data but relies mainly on its own data, which provides a finer level of detail. On-time completions are analysed, along with all instances of students who did not complete on time, to identify trends. Last year the Conservatoire began analysing completions within two or more years beyond the standard programme length, as more students take a year or two of suspension (as allowed by its regulations) due to health or personal circumstances.
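One way to band completions by time taken is sketched below in Python. The convention of identifying academic sessions by their starting calendar year, the band labels and the cohort data are assumptions for illustration; the source does not say how the Conservatoire codes completions.

```python
from collections import Counter
from typing import Optional


def completion_band(entry_session: int, completion_session: Optional[int],
                    normal_years: int) -> str:
    """Band one student's completion relative to the normal programme length.

    Sessions are identified by their starting calendar year (2014 = 2014-15).
    Completing at or before the normal length counts as on time.
    """
    if completion_session is None:
        return "not completed"
    years_taken = completion_session - entry_session + 1
    extra = years_taken - normal_years
    if extra <= 0:
        return "on time"
    if extra == 1:
        return "one extra year"
    return "two or more extra years"


# Hypothetical four-year cohort entering in 2014-15.
cohort = [(2014, 2017), (2014, 2017), (2014, 2018), (2014, 2020), (2014, None)]
bands = Counter(completion_band(entry, done, normal_years=4) for entry, done in cohort)
print(bands)
```

Keeping the "one extra year" and "two or more extra years" bands separate means that completions delayed by a period of suspension can still be tracked, rather than disappearing into a single "not on time" category.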

Datasets: presentation and access

Possible solutions

- Agree which data are most important

- Use colour-coding/simple visual presentation to manage large datasets

- Use benchmarked data; consider trends within and/or outside programme

- Avoid overreliance on quantitative data

- Triangulate leavers’ reports with the wider qualitative dataset

- Recognise the value of informally sourced data and staff knowledge of their student population

- Be careful not to discount small datasets on grounds that they are not meaningful

- Incorporate consideration of learning analytics

- Consider the advantages of data dashboards (filtering, comparing trends, improving accessibility and visibility)

- Use ‘at a glance’ spreadsheets.

Examples of institutional practice

- Data dashboard to make data visually striking

- ‘Tartan rug’ – visual representation of student satisfaction

- Online reporting

- Using benchmarked data

- Working with small datasets to improve the student experience

- Placing small datasets in the wider context.

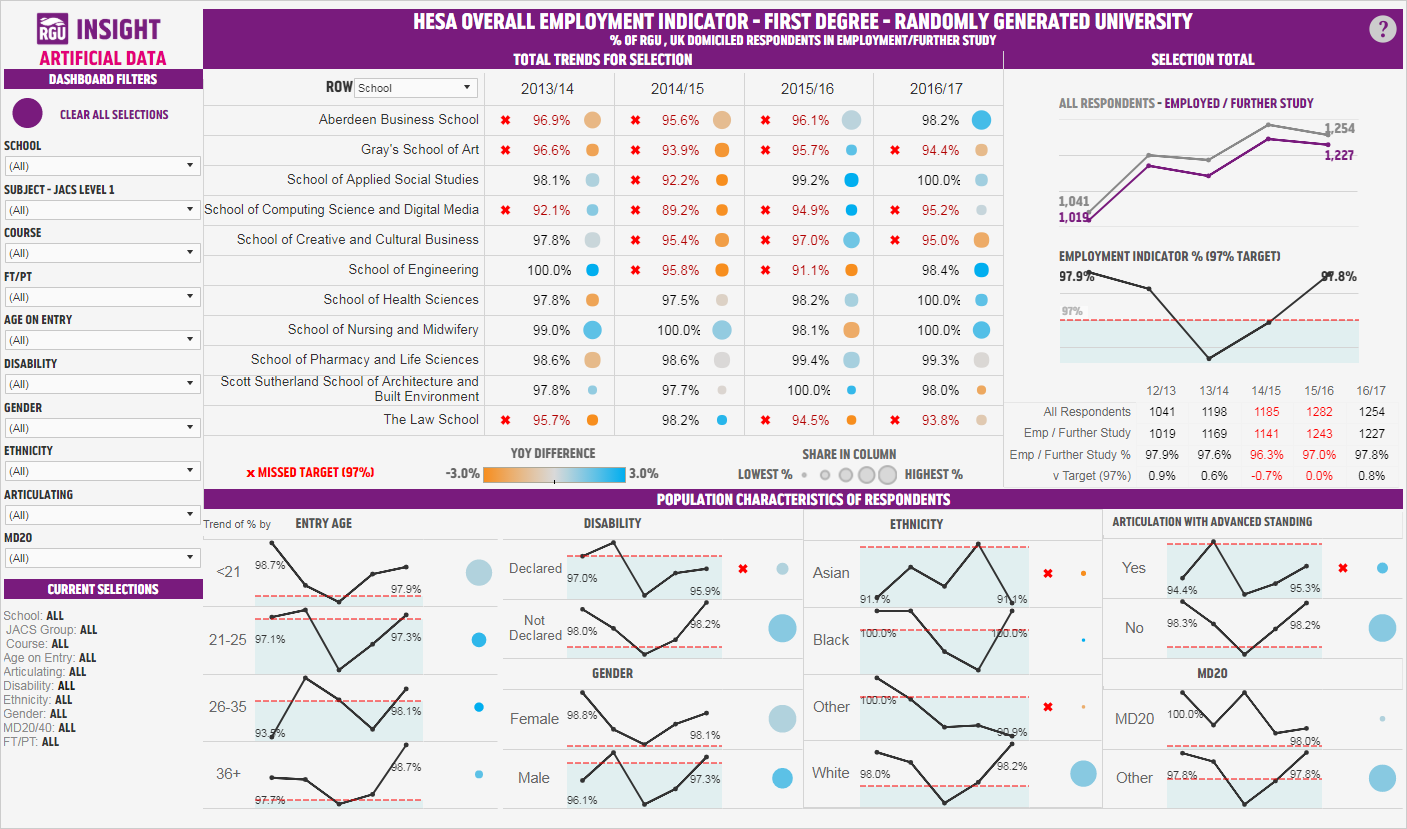

Data dashboards - making data visually striking

RGU dashboards use colour coding. One feature of this is to show immediately how module performance compares with institutional thresholds. Year-on-year trend data uses prominent coloured bubbles to show improvement or decline. This offers a high level of insight before detailed consideration of the data is undertaken. Another key feature is that the performance of student groups, including groups defined by certain key protected characteristics, is clearly presented. The dashboards, which also incorporate tables and graphs, are interactive, allowing users to filter the data to support their evaluation for Annual Monitoring Reporting.

Anonymised dashboard

‘Tartan rug’ – visual representation of student satisfaction

St Andrews has developed a ‘tartan rug’ system to categorise student feedback gathered through module evaluation. The rug shows student satisfaction for module evaluation questions benchmarked against the University mean, using a three-colour system. The colours do not in themselves indicate a problem; however, where satisfaction falls below the University mean, this serves as a trigger for discussion and intervention. In response to user feedback, the University has recently extended the rug to include the number of respondents as a percentage of the module cohort. The Academic Monitoring Group considers the rug and provides feedback to the Schools. This includes requests for further information on high-performing modules (where good practice can be shared), as well as areas for investigation. Schools are required to reflect on their engagement with the rug within the Annual Monitoring Reporting process.
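A three-colour rug of this kind can be sketched in Python. The rule used here (green at least five percentage points above the University mean, red at least five points below, amber in between), the unweighted mean across modules, the module codes and the scores are all assumptions for illustration; the source does not give St Andrews' actual thresholds or calculation.

```python
from statistics import mean


def rug_colour(score, uni_mean, band=5.0):
    """Hypothetical three-colour rule relative to the University mean."""
    if score >= uni_mean + band:
        return "green"  # clearly above the mean
    if score <= uni_mean - band:
        return "red"    # clearly below: a trigger for discussion, not a verdict
    return "amber"      # within the band around the mean


def tartan_rug(modules, band=5.0):
    """Build one row of colours per module, plus its response rate (%).

    `modules` maps a module code to per-question satisfaction scores (%),
    the number of respondents and the module cohort size.
    """
    n_questions = len(next(iter(modules.values()))["scores"])
    # Simplification: unweighted mean of module scores for each question.
    uni_means = [mean(m["scores"][q] for m in modules.values())
                 for q in range(n_questions)]
    rug = {}
    for code, m in modules.items():
        colours = [rug_colour(s, uni_means[q], band) for q, s in enumerate(m["scores"])]
        response_rate = round(100 * m["respondents"] / m["cohort"], 1)
        rug[code] = (colours, response_rate)
    return rug


modules = {
    "AB1001": {"scores": [90, 70], "respondents": 30, "cohort": 60},
    "CD2002": {"scores": [80, 80], "respondents": 12, "cohort": 40},
    "EF3003": {"scores": [70, 90], "respondents": 45, "cohort": 50},
}
for code, (colours, rate) in tartan_rug(modules).items():
    print(code, colours, f"{rate}% responded")
```

Showing the response rate alongside the colours reflects the refinement described above: a red cell based on a handful of respondents warrants a different conversation from one based on most of the cohort.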

Online reporting

The University introduced online programme-level Annual Monitoring Reports (AMRs) in 2018. The online template takes the form of a SharePoint document, which is personalised with links to relevant data on:

- student success

- student satisfaction

- student destinations

- programme health.

All programme leaders have access to high-level data and the option to view more detailed information, including breakdown by protected characteristics. Programme leaders also draw on module reports, which include detailed information on pass rates and progression. Whilst these reports are helpful, it can be challenging to interpret data for larger modules that span multiple programmes. Programme leaders need to be mindful of the place of each module within their curriculum, for example whether it is core or optional. An important advantage of the online system is that it is more resource-efficient for data users. It also improves oversight, reporting and sharing of practice across UWS at many levels. Some programme leaders use the system as a repository for other data relevant to AMR, as there is a facility to upload appendices. Reports are not currently provided to students through this system, although the technology is in place to facilitate student access.

Using benchmarked data

Colleges at Edinburgh provide benchmarked data to support Schools’ preparations for Annual Monitoring Reporting. The data includes information on performance within each School benchmarked against its College. Each College may implement benchmarking to suit its local context, so the data could relate to retention and progression, depending on how ‘performance’ is interpreted.

Working with small datasets to improve the student experience

Several years ago, award outcomes for the BEd were noted to be lower for students with disabilities. Through their engagement with the data and team discussion, staff identified that there might be a need for additional support. As the numbers concerned were very small, it was decided that a blanket intervention would not be appropriate or helpful. Instead, staff continued to support students on an individual basis, exploring approaches that might help those with disabilities achieve their full potential. Since the analysis was undertaken, award outcomes have improved to the point where there is no longer a difference in outcomes for this group. While it is difficult to link this improvement directly to Annual Monitoring Reporting, engagement with the data through that process undoubtedly heightened awareness of the challenges and of the importance of early action to support students.

Placing small datasets in the wider context

The Conservatoire has a student population of around 1,200 degree students. This means that datasets for progression and retention are small, especially when broken down by student characteristics. The analysis undertaken for Annual Monitoring Reporting has proved valuable when working with small numbers. Because it has been possible to determine statistical significance, the analysis has credibility and helps to target activity. The Analyst has oversight of a much broader suite of information, within which data is broken down in considerable detail. For example, analysis has been undertaken by gender and musical instrument (such as female cellists). Analysing data at this level builds up a rich bank of information. Staff can use this data to benchmark their own programme data and test hypotheses with support from the Analyst.
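The source does not say which test the Analyst uses. For small counts, one common choice is Fisher's exact test on a 2x2 table, sketched below in plain Python using `math.comb`. Treat it as an illustration of the idea rather than the Conservatoire's method; in practice a library routine such as SciPy's `scipy.stats.fisher_exact` would usually be used. The counts in the example are hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    For example, rows could be "group of interest" and "everyone else",
    and columns "progressed" and "did not progress".
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum over every table no more likely than the observed one; the small
    # tolerance stops floating-point noise from excluding tied tables.
    return sum(p for p in (prob(x) for x in range(lowest, highest + 1))
               if p <= p_observed * (1 + 1e-7))


# Hypothetical example: 3 of 4 students in a small group progressed,
# compared with 1 of 4 in a comparison group.
print(round(fisher_exact_two_sided(3, 1, 1, 3), 4))  # 0.4857
```

A p-value of about 0.49 means a difference of this size could easily arise by chance in groups this small, which illustrates why significance testing helps to separate genuine patterns from noise when data is broken down in fine detail.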

Annual monitoring process design

Possible solutions

- Change timing

- Avoid duplication across levels of AMR

- Introduce reporting by exception

- Provide constructive feedback to report authors to challenge and stimulate discussions

- Promote engagement throughout the year.

Examples of institutional practice

- Streamlining reporting and altering reporting deadlines

- Changes to reporting deadlines and discussion format

- Reporting levels as building blocks

- Quality calendar

- Using mid-module feedback to complement AMR

- Reporting by exception

- Pre-populated template.

Streamlining reporting and altering reporting deadlines

QMU introduced a new shorter Annual Monitoring Report (AMR) template in 2018. Within the template, authors are asked to identify three aspects of good practice, three areas for development, and three areas to bring to the attention of University committees. The submission date was also brought forward from October to June, as programme leaders considered this to be better aligned with the planning cycle for the new academic year.

There is an expectation that teams revisit and resubmit the templates in October following receipt of:

- NSS (National Student Survey) data

- External Examiner reports

- performance indicators from Registry.

In some cases, the consideration of the extra data has resulted in amendments to the June submission.

Changes to reporting deadlines and discussion format

At Heriot-Watt University, several revisions were made to Annual Monitoring Reporting. The changes were implemented to improve the process and increase its value (particularly for Schools). One key change was bringing the submission deadline forward by six months, from December to June. This allows the most recent academic session to be reported on, making the process less retrospective. Additionally, separate School annual discussion meetings (with School Management Teams) were replaced with a single joint dissemination event. This allowed the wider University community (academic and professional services staff) to engage.

Reporting levels as building blocks

At Abertay University, an agreed dataset is sent to Schools, where programme leaders write a programme report. This report informs the Division report, which, in turn, informs the School report. The University believes that building reports from programme to Division to School works well and has significantly reduced duplication of effort.

Quality calendar

SRUC has produced a colour-coded quality calendar, which supports staff engagement with AMR (Annual Monitoring Report) and other quality processes. The calendar sets out key dates across the academic year for collation, receipt and evaluation of data, including progression and retention data. It also provides information on the timing of assessment and key committee and assessment board meetings. Importantly, the calendar links to the on-going review and implementation of programme and departmental quality enhancement plans. The plans are informed by AMR and subject to regular review at intervals of no more than three months. This approach provides a structured framework for on-going reflection and engagement, meaning that AMR actions remain ‘live’ throughout the full academic year.

The calendar is provided in hard copy to all academic colleagues, meaning that it is visible across SRUC’s campus sites. Academic staff also receive email reminders of key deadlines. The calendar has been well received as a useful resource to help staff meet deadlines and understand the relationship between quality processes and enhancement. Early discussions are underway to develop the format of the calendar, for example as an interactive online version.

SRUC - Quality 2018-19

Publication date: 27 Aug 2019

Using mid-module feedback to complement AMR

Progression and retention data considered through AMR (Annual Monitoring Report) in 2017-18 highlighted issues related to student performance on modules contributing to the BA (Hons) Business Management. In response, the programme team introduced mid-module feedback opportunities so that potential issues could be identified during delivery, rather than only at the end through module evaluations. Additionally, Peer Assisted Learning was introduced for some of the more challenging modules. Module-level data on student performance helped to inform this, as it allowed the team to see how students performed as a cohort compared with the other modules they were taking in the same semester.

Recent work on the correlation between attendance at PALS (Peer Assisted Learning Scheme) sessions and assessment marks for one of the identified modules shows that students who attended the PALS sessions performed well in both assessments. Students have also provided more positive feedback about their experience of some modules since the introduction of mid-module evaluation. Whilst it is too early to see the overall impact on progression and retention in 2018-19, discussions with students suggest that the intervention has had a positive impact.

Reporting by exception

- areas of good practice

- actions to address areas for further development.

Pre-populated template

The University’s Programme Monitoring Reports (PMR) system was developed by IT, Strategic Planning and Quality. The system was set up as an online form, personalised with links to relevant data for each programme, including:

- progression

- retention

- student satisfaction (NSS)

- DLHE/graduate outcomes

- enrolment

- applications etc.

As a result, reports are more reflective and evidence-based. Staff do not need to find and interpret the data, as it is already linked for them to reflect on. Reporting has also become more consistent, with a clear focus on actions. The online approach also makes it easier to track results and identify themes over time.

Annual monitoring as a vehicle for change

Possible solutions

- Understand the relationship between AMR and other processes - avoid viewing AMR in isolation

- Accept that enhancements arising from AMR interventions can take time to come to fruition

- Recognise, and make explicit to stakeholders, the wider benefits of AMR as a vehicle to stimulate discussion and help place data in context

- Create opportunities for staff and student dialogue

- Consider approaches to sharing data with students

- Make data available to all staff to facilitate comparison

- Consider the use of module thresholds to support progression and retention

- Introduce targeted questions that relate to institutional priorities

- Use electronic stakeholder newsletters.

Examples of institutional practice

- Annual Monitoring Reporting, Strategic and Outcome Agreement Planning

- Learner analytics and monitoring engagement

- Cross-institutional retention task force

- Integrating learning analytics

- Using dialogue meetings to explore possible interventions

- Annual Enhancement and Monitoring event and newsletter

- Data sharing with students

- Online staff newsletter

- Annual dissemination event

- Question of the year

- Using module thresholds to support interventions.

Annual Monitoring Reporting, Strategic and Outcome Agreement Planning

Annual Monitoring Reporting (AMR) supports institution-level planning. The University Committee for Learning and Teaching and the University Committee for Research and Innovation consider AMR through the AMR Summary Report. The report informs the Committees’ forward agendas for strategic developments in learning and teaching, and in research. AMR is linked to the Scottish Funding Council (SFC) Outcome Agreement through the University’s SFC Quality Report, which features an AMR overview.

Learner analytics and monitoring engagement

A 2017-18 School Annual Report found that retention rates had improved due to the implementation of a student retention initiative arising from the recommendations of the previous year’s Report. However, when learner analytics data was captured for the Programme Annual Report, it showed that the withdrawal rate for Term 2 re-sits had remained unchanged for some programmes, against the overall upward trend. In response, the School and the Student Services team prioritised this area, monitoring engagement and putting support in place for students identified as at risk.

A School investigated a disappointing average pass rate for one Division. This was comparatively low at the main diet in the previous year (against the University average) but recovered after the re-sit diet. However, in the programme-level analysis of the data in 2017-18, it was found that there were areas of both over-performance and under-performance. This was reflected upon in the Divisional Annual Reports and measures were proposed to support teaching staff and improve student performance within the underperforming areas.

This example of practice complements Annual Monitoring Reporting.

Cross-institutional retention task force

The University has a cross-institutional retention task force that deals with retention and progression, with representation from all Schools, Registry, Academic Services, IT, Student Recruitment and Admissions. In the last year, the task force has reviewed the correspondence sent to students who have become disengaged from their studies. The aim is to provide more support and to be clearer about what students can do to address their situation. Good practice in supporting students and developing procedures for “early interventions” has been shared within the group. The group has also championed attendance monitoring at lectures as a way of providing early warning of disengagement. Members are also involved in developing a learning analytics policy to guide the future use of learning analytics in supporting disengaged students.

This example of practice complements Annual Monitoring Reporting.

Integrating learning analytics

The University is embedding learning analytics activity into its oversight of retention and progression. Examples include:

- integrating learning analytics into online course design

- working with programme leaders to design student engagement dashboards

- developing a student assessment and feedback planning tool.

This example of practice complements Annual Monitoring Reporting.

Using dialogue meetings to explore possible interventions

Annual Monitoring Reporting (AMR) dialogue meetings are held annually in October. The meetings are conducted by video-conference to facilitate participation from each of SRUC’s six campus sites. The video-conferences are held over two days, during which there are eight cross-campus meetings, grouped by cognate subject areas. Following the dialogue meetings, a synopsis of key themes is provided to the Learning and Teaching Committee.

The meetings take the form of a peer scrutiny event. A review panel of around eight members is established to develop questions in advance for the participating subject areas. Typical attendance at each meeting is between 15 and 20 participants. Review panel membership includes academic representation, staff from the SRUC quality and learning engagement teams, and student representation (usually the Student Association Vice President). Students from participating subject areas currently contribute through their involvement in the quality processes that underpin AMR. Going forward, SRUC is considering involving students as members of the participating subject area group. The involvement of external reviewers is also under consideration.

The meetings provide an important structured opportunity for staff and students from the six campus sites to reflect on the AMR outcomes. This ties in well with the continuous development of quality procedures as vehicles for reflection and enhancement at SRUC. Topics covered at the meetings are wide ranging and include discussion of progression and retention initiatives informed by evaluation of relevant data.

During the 2016-17 annual dialogue meetings, the impact of poor mental health on the retention and attainment of students was discussed. This resulted in an ongoing initiative providing training for over 100 staff and students in mental health first aid and the introduction of Therapet Days at each campus. Some staff dogs are now Therapet accredited and able to provide support on a weekly basis. Further to this, SRUC and SRUCSA in partnership have accessed and embraced the SANE Black Dog campaign, which provides a confidential out of hours support line for students (and staff with concerns about students), with analytics provided to SRUC to help shape future wellbeing initiatives. This is being used to inform the SRUC Health and Wellbeing Strategy for both staff and students.

Annual Enhancement and Monitoring event and newsletter

At UWS students take part in the annual institution-level Enhancement and Monitoring (EAM) Event. Annual Monitoring Reporting is the focus within the EAM, which also provides opportunity for updates on key institutional developments through a five-minute thesis approach. AMR discussions are conducted in a world-café format. Typically, the Assistant Deans move around the tables to present key highlights and challenges identified through AMR for their School. Then discussion at the tables informs future enhancement. Conversations can be wide-ranging but are likely to include consideration of progression and retention. Key themes are captured in the EAM Newsletter, which is provided to all staff and students.

Data sharing with students

Dundee University Students’ Association (DUSA) and the University of Dundee have been working in partnership to explore the possibility of a data-sharing agreement to support evidence-based enhancement. This activity is progressing within the wider context of Dundee’s Student Partnership Agreement (SPA). The SPA identifies the development of student welfare and pastoral support as a key priority for 2018-19. Related performance indicators within the SPA include:

- swipe card entry to DUSA

- uptake of support and advice from DUSA

- progression and retention statistics broken down by student groups.

The agreement would provide for the sharing of selected data that is currently held separately by the University and DUSA. Under the proposals, University data would include aggregated attendance, retention, progression and attainment statistics. Data from DUSA would include results from the Student Matters Survey and Student-led Teaching Awards. The sharing of this data would enable users to triangulate information on progression and retention from an extended evidence base. The Student Matters Survey provides a rich source of qualitative information on factors affecting the student experience, including financial and housing considerations. If adopted, the data-sharing agreement could be used to good effect within Annual Monitoring Reporting (AMR). A further benefit would be increased student awareness of the AMR process and student contribution to preparing AMR reports.

Online staff newsletter

Edinburgh Napier’s online staff newsletter (The Bones) included, for the first time in 2019, a summary of key themes emerging from Annual Monitoring Reporting (AMR). The newsletter presents information in a format that is engaging, accessible and informative. The AMR article explains to staff how AMR submissions are used for internal and external (ELIR) purposes. Examples of good practice are identified, as well as some areas where further work might be needed. The article reflects not just on the themes emerging from AMR, but also the process. This reflection includes identification of challenges of working with AMR data. Within the article staff are encouraged to continue AMR discussions through formal and informal channels. It is also emphasised to readers that the AMR process is ‘at the heart of the University’s enhancement culture’. The Bones is available to all staff and external audiences, on the University’s website. This means there is potential to stimulate positive engagement with AMR, including discussion around the use of data, across a wider readership than the immediate audience for reports.

Annual dissemination event

An annual dissemination event is held in October with the primary aim of promoting and sharing good practice. The Academic Monitoring Group draws up a list of around 12 potential topics for the event, based on scrutiny of School Annual Monitoring Reporting submissions. Directors of Teaching then vote to narrow the list to five topics. Attendance at the event includes Deans of Teaching plus one other colleague from each of St Andrews’ 18 Schools. School Student Presidents are also invited to attend. The event takes the form of a series of five-minute presentations, after which the presenters facilitate group discussions. A brief report from the event is also prepared for the Learning and Teaching Committee, providing an opportunity to share practice with a wider audience. Topics at the event are wide-ranging and can relate to progression and retention initiatives. For example, there has been discussion around lecture attendance and the benefits for students of participating in timetabled learning experiences. There is good evidence of cross-School transfer of practice following dissemination events; for example, one School has adopted graduate exit interviews.

Question of the Year

The inclusion of a ‘Question of the Year’ in the report has proven to be an effective and valuable way to gather information on, and respond to, topical issues and/or areas for enhancement. Responses to the most recent question, related to the Careers Link role in academic Schools, were collated and shared with the Careers Centre. They will use this data to:

- structure training for the Careers Links

- identify areas of good practice and areas of concern

- review against St Andrews’ DLHE data to identify Schools whose students need more in-depth support.

The 2019 iteration of the form includes a question on staff and student mental health and wellbeing. Responses will be shared with the University’s Mental Health Strategy Group.

Using module thresholds to support interventions

RGU has an 80% threshold for first-diet module pass rates. A red cross on the dashboard marks a module that has not achieved the threshold, and in such cases an evaluative commentary is required within the Annual Monitoring Report. Importantly, a red cross does not in itself denote a significant issue with delivery or any other aspect of the module under consideration. Instead, it serves as a trigger for further interrogation of the data and, where appropriate, identification of interventions to support student achievement.
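The threshold check can be sketched in Python. The 80% figure comes from the text above; the module codes and counts are hypothetical, and treating a rate of exactly 80% as meeting the threshold is an assumption.

```python
THRESHOLD = 80.0  # first-diet module pass-rate threshold (%), as described above


def flag_modules(results, threshold=THRESHOLD):
    """Return modules whose first-diet pass rate falls below the threshold.

    `results` maps a module code to (students passed, students assessed).
    Each flagged module would need an evaluative commentary in the AMR.
    """
    flagged = {}
    for code, (passed, assessed) in results.items():
        rate = 100 * passed / assessed
        if rate < threshold:  # assumption: exactly 80% counts as achieved
            flagged[code] = round(rate, 1)
    return flagged


results = {"MOD101": (45, 50), "MOD102": (30, 40), "MOD103": (40, 50)}
print(flag_modules(results))  # {'MOD102': 75.0}
```

Returning the rate rather than a bare yes/no mirrors the point made above: the flag is where interrogation of the data starts, not a conclusion about the module.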

Staff confidence in working with data

Possible solutions

- Adopt a team-based approach - Planners, Registry and IT colleagues can all provide valuable support from different perspectives

- Facilitate peer support - create space for dialogue

- Consider providing a dedicated staff resource to support engagement with data

- Pre-populate AMR templates with data

- Design templates to follow same order as dashboard

- Make best use of institutional written guidance and dashboard tips functionality.

Examples of institutional practice

- Data dashboard training

- Data champions

- Statistical Analyst appointment.

Data dashboard training

RGU held training sessions for staff users and Student Presidents following the launch of the data dashboard for Annual Monitoring Report (AMR) in 2018. These comprised mandatory training for staff, followed by optional drop-in sessions for those who required them. In addition to the training sessions, expert data owners from professional services provide ongoing support. To help staff understand how the data can be used to support enhancement, they were encouraged to bring the AMR template to the training. The dashboard was designed for the purpose of AMR, so the data is presented in the same order in which commentary is entered in the AMR template. This structured approach supports engagement, as staff can view the relevant information in a logical order. Feedback from the training sessions was very positive, so they will be repeated this year. This will benefit staff undertaking AMR for the first time and act as a refresher for more experienced staff. An unexpected benefit of the drop-in sessions was that staff were able to share their practice in using data, both within and across disciplinary teams. The involvement of Student Presidents in the sessions supports their engagement with discussion at committee meetings. They are also well placed to cascade information about using data through the student representative structure.

Data champions

Data champions have recently been appointed for most of the Schools at the University. They help to raise awareness of the information available on, and the insight to be gained from, the University data dashboards. They also support colleagues who are using the data for Annual Monitoring Report (AMR) and other purposes. The champions have a specific remit for retention. More broadly, they are instrumental in promoting and facilitating staff engagement with the Enhancement Themes. The champions have varying prior experience of data analysis and need not be experts in all possible approaches. Meetings of the full group of champions provide an opportunity to share good practice and challenges and to develop approaches across the University as a whole. Dundee also runs dedicated Organisational Professional Development (OPD) workshops on using data under the auspices of the current Enhancement Theme. The impact of the workshops will be assessed as part of the University’s reporting on the Enhancement Theme evaluation.

Statistical Analyst appointment

The Conservatoire has appointed a Statistical Analyst with a broad remit that includes:

- engagement with a range of management information

- survey data

- league tables.

The Analyst provides data for Annual Monitoring Reporting (AMR), including progression and retention data. An accompanying report includes statistical interpretations highlighting whether differences in the data are statistically significant. This report also includes a qualitative summary, which highlights areas for discussion and suggests possible factors that might have affected the numbers within the dataset. Programme teams can discuss the report with the Analyst to support their engagement with AMR data. The report is user-friendly and extracts key points for follow-up through AMR. Academic staff welcome the provision of data in this format. They also value the constructive challenge that arises through their dialogue with the Analyst.

Find out more about institutions' annual monitoring processes

- Abertay University | Not currently available online

- University of Aberdeen | Annual course and programme review

- University of Dundee | Policy and guidance on the annual review of taught provision

- Edinburgh Napier University | Annual Monitoring & Review (see section 2a)

- University of Edinburgh | Annual Monitoring, Review and Reporting

- Glasgow Caledonian University | Programme Monitoring (PDF, 0.38MB)

- Glasgow School of Art | Programme Monitoring and Annual Reporting Policy (PDF, 0.22MB)

- University of Glasgow | Annual Monitoring

- Heriot-Watt University | Annual Monitoring and Review

- University of the Highlands and Islands | Academic Standards and Quality Regulations 2018-19 Annual Quality Monitoring

- Open University in Scotland | Not currently available online

- Queen Margaret University | Annual Monitoring forms and guidance

- The Royal Conservatoire of Scotland | Quality Assurance Handbook (PDF, 1.23MB)

- Robert Gordon University | Academic Quality Handbook

- Scotland’s Rural College | Education manual (see section B Portfolio delivery, then B3 - Monitoring and Review)

- University of St Andrews | Annual Academic Monitoring

- University of Stirling | Review and Monitoring

- University of Strathclyde | Not currently available online

- University of the West of Scotland | Regulations for Programme and Module Approval, Monitoring and Internal Review

- You may find it helpful to use this sharing practice resource in tandem with the HE Data Landscape resource, which explains data sets commonly used in key quality processes, such as annual monitoring.

- If you are interested in using evidence to explore retention and progression, look at our key discussion topics booklet.